Most of the Tech:Net crew is traveling up to Vikingskipet tomorrow already to establish the internet connection and some of the most critical components of the infrastructure. From Saturday morning we’ll be working on getting the core equipment up and running, so that we are absolutely sure we can provide the best and most stable networking services for our users when they arrive on Wednesday.

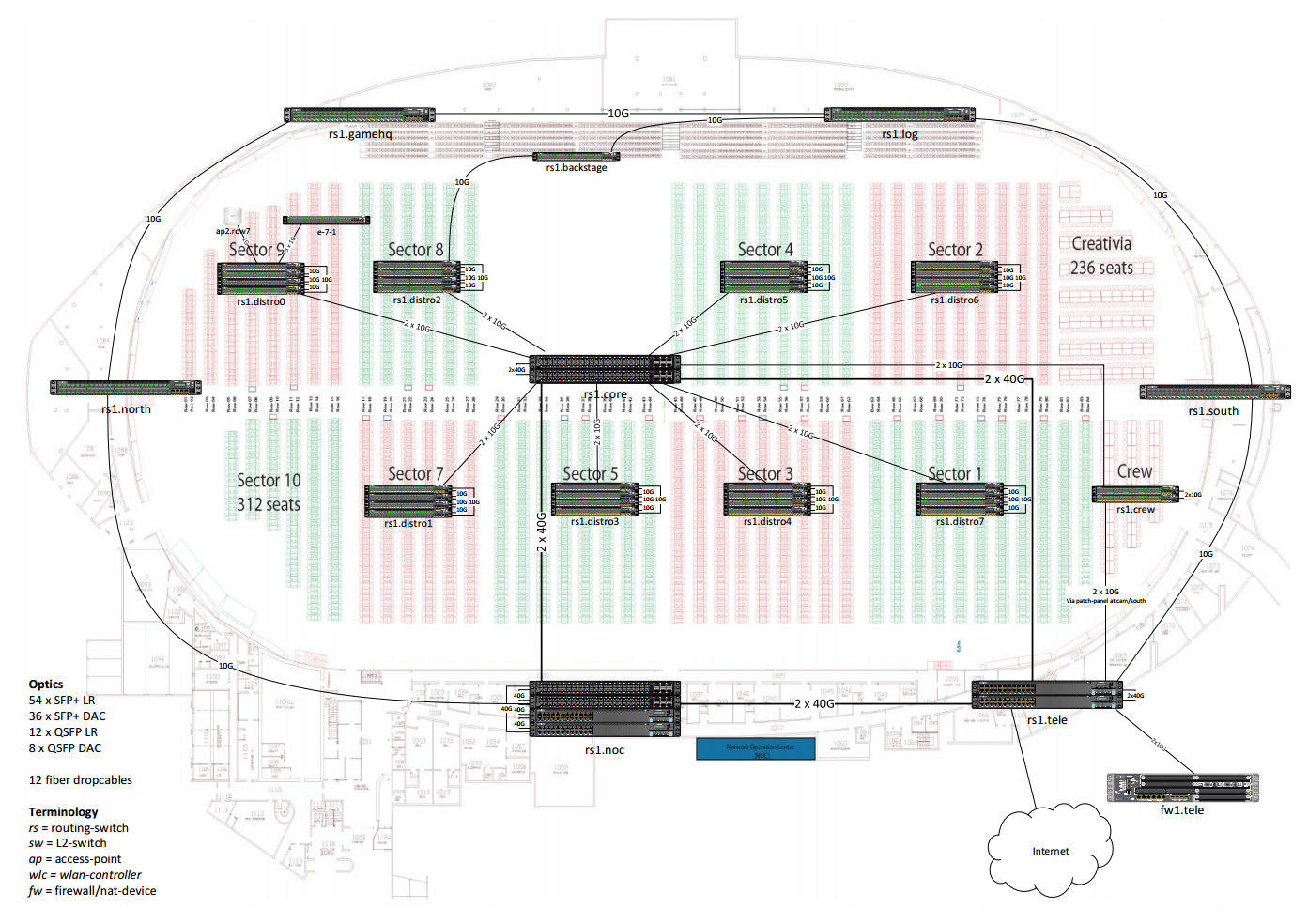

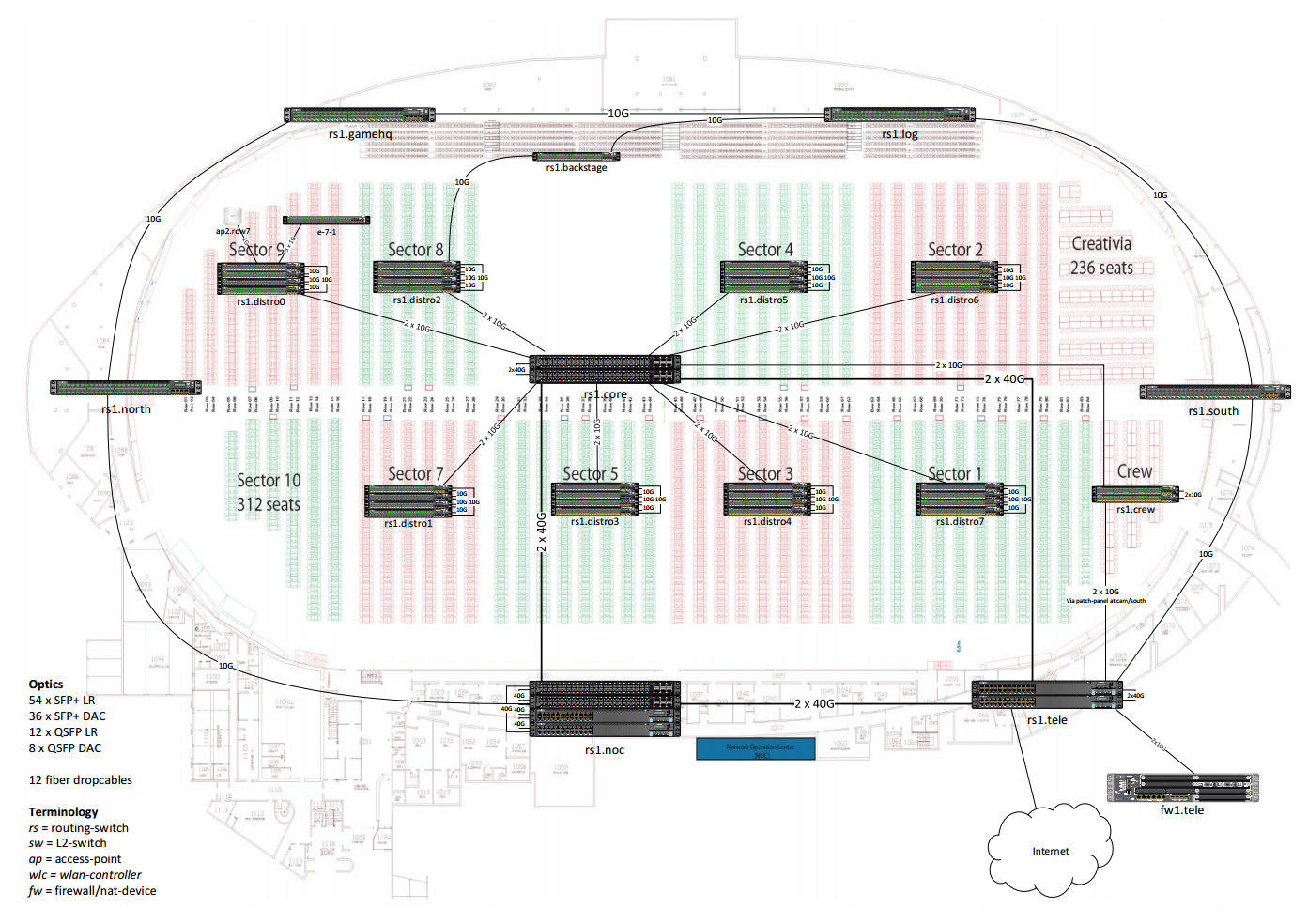

Here is the latest revision of the network design:

(We will make the final version available in a high-quality format after TG.)

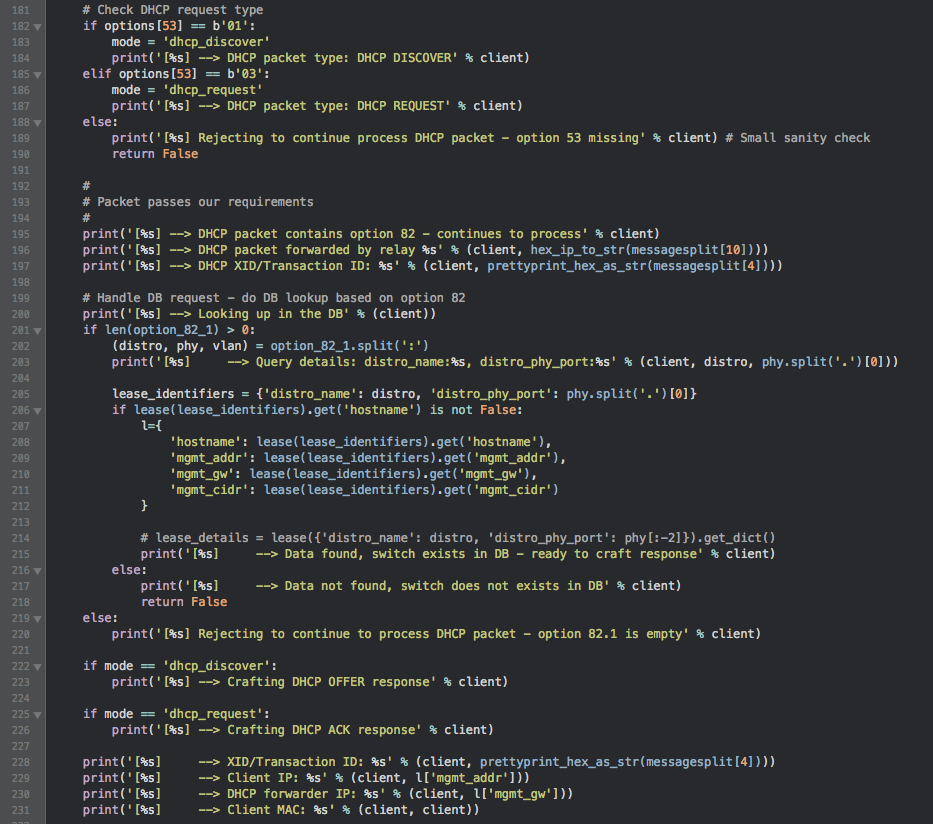

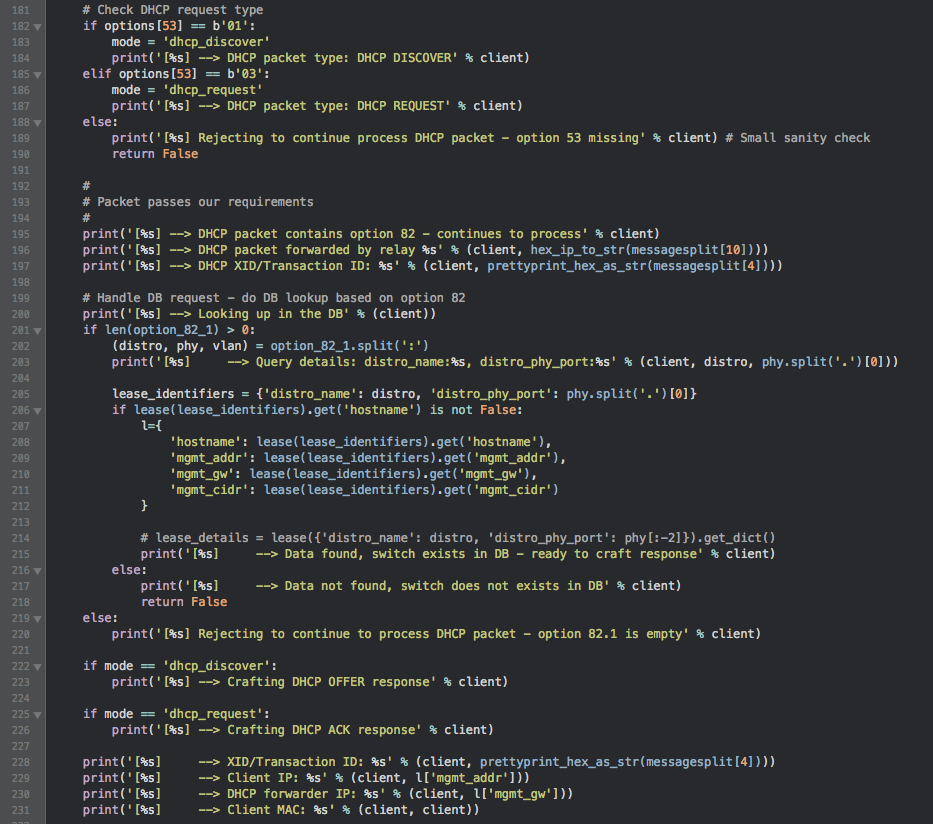

For some years now, The Gathering has used different methods for automatic provisioning of the edge switches that the participants connect to. The first iteration of this system was used to configure ZyXEL switches, and was called ‘zyxel-ng’. Then, in 2010, The Gathering bought new D-Link edge switches with gigabit ports. New vendor, new configuration methods. ‘dlink-ng’[1] was born. It had lots of ugly hacks and exception handling. This was due to several reasons, but mainly because the D-Links wouldn’t take configuration automatically from TFTP/FTP/similar.

Five years passed. We’d outgrown the number of switches that were bought in 2010, and we needed more. After thorough research and several rounds of RFQs, we decided to buy new switches for TG15. We ended up buying Juniper EX2200s as edge switches. This meant, once again, a new configuration tool. We had this in mind when writing the RFQ, so we already knew what to expect. After some testing, trial and error, we landed on a proof-of-concept. It involves DHCP Option 82, a custom-made DHCP server and some scripts to serve software and configuration files over HTTP. The name? Fast and Agile Provisioning (FAP)[2].

With this tool, we can connect all the edge switches on-the-fly, and they’ll get the configuration designed for that specific switch (based on what port on the distro they connect to). If the switch doesn’t have the specific software we want it to have, it’ll automatically download this software and install it.

It’s completely automated once set up, and can be kept running during the entire party (so, for example, if an edge switch fails during the party, we can just replace it with a blank one, and it’ll get the same configuration as the old one).
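
To illustrate the idea of picking a configuration from the distro port, here is a minimal Python sketch. It is not taken from FAP itself. The sub-option layout (code, length, value) and the codes 1 (circuit ID) and 2 (remote ID) come from RFC 3046. Everything else is an assumption made for the example: that the relay puts the port name and the distro name in those fields as plain ASCII, and the switch names and file names in the hypothetical `CONFIG_BY_PORT` table.

```python
from typing import Dict

# RFC 3046 sub-option codes inside DHCP option 82 (Relay Agent Information).
CIRCUIT_ID = 1
REMOTE_ID = 2

# Hypothetical lookup table: (distro name, distro port) -> config file to serve.
CONFIG_BY_PORT = {
    ("distro1", "ge-0/0/1"): "e1-1.conf",
    ("distro1", "ge-0/0/2"): "e1-2.conf",
}


def parse_option82(payload: bytes) -> Dict[int, bytes]:
    """Split an option 82 payload into {sub-option code: raw value}."""
    subopts: Dict[int, bytes] = {}
    i = 0
    while i + 2 <= len(payload):
        code, length = payload[i], payload[i + 1]
        value = payload[i + 2:i + 2 + length]
        if len(value) != length:
            raise ValueError("truncated sub-option")
        subopts[code] = value
        i += 2 + length
    if i != len(payload):
        raise ValueError("trailing bytes after last sub-option")
    return subopts


def config_for(payload: bytes) -> str:
    """Pick the config file for the switch behind this relay port.

    Assumes (for this sketch only) ASCII port and distro names.
    """
    subopts = parse_option82(payload)
    port = subopts[CIRCUIT_ID].decode("ascii")
    distro = subopts[REMOTE_ID].decode("ascii")
    return CONFIG_BY_PORT[(distro, port)]


def encode_subopt(code: int, value: bytes) -> bytes:
    """Helper to build a sample payload for the demo below."""
    return bytes([code, len(value)]) + value


sample = encode_subopt(CIRCUIT_ID, b"ge-0/0/2") + encode_subopt(REMOTE_ID, b"distro1")
print(config_for(sample))  # e1-2.conf
```

Because the lookup key is the port on the distro rather than anything stored on the edge switch, a blank replacement switch plugged into the same port ends up with the same file.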

[1] https://github.com/tech-server/tgmanage/tree/master/examples/historical/dlink-ng

[2] https://github.com/tech-server/tgmanage/tree/master/fap

As The Gathering 2015 draws closer we thought it was about time for an update regarding the network.

We have been through a comprehensive round of evaluating and purchasing new edge/access switches to replace the D-Links that have been the access switches for the last 5 events. After a lot of planning, meetings, e-mails, more meetings, shortlisting and more meetings, we ended up choosing nLogic as our main collaborator, and we are happy to announce that TG will be using equipment from Juniper Networks for TG15 and the years to come. nLogic has been very forthcoming and fantastic to work with, and we look forward to working with them. nLogic is a consultancy company in Oslo, which happens to be a Juniper Elite Portfolio Partner in Norway.

Most of the equipment has been purchased as part of the deal with nLogic, at very good prices (of course, or we could never have afforded these cool switches). Thus, the equipment will end up being owned by KANDU/TG, free for us to do what we want with after the contract ends and we, of course, have paid the bank all its money…

Core:

As core-switches this year we will be using two Juniper QFX5100-48S switches. These high-performance, low-latency switches are based on the Trident 2 chipset and offer 48 x 10G and 6 x 40G interfaces, making them ideal to run as core-switches in a network such as ours.

Distribution:

This year we will be running the Juniper EX3300-48P switches in stacks (Virtual-Chassis) of four with 20Gbps uplink to the core-switches (upgradable to 80Gbps if needed). The EX3300-48 comes with 48 x 1G copper and 4 x 10G SFP+ interfaces. Running these switches in a stack will give us full redundancy as well as the scalability and speed we need. This switch model will also be used for the backend network in the arena (CamGW, LogGW, etc).
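
As a rough back-of-the-envelope check of what those uplink figures mean, the short Python sketch below computes the worst-case oversubscription of one distribution stack. It assumes, purely for illustration, that all 4 x 48 copper ports in a stack are in use and each carries a full 1G at the same time, which is far more than a real party would generate.

```python
def oversubscription(access_ports: int, port_gbps: float, uplink_gbps: float) -> float:
    """Ratio of possible downstream traffic to available uplink capacity."""
    return access_ports * port_gbps / uplink_gbps


STACK_MEMBERS = 4        # switches per Virtual-Chassis stack (from the text)
PORTS_PER_SWITCH = 48    # 1G copper ports per EX3300-48P (from the text)
downstream_ports = STACK_MEMBERS * PORTS_PER_SWITCH  # 192 x 1G

for uplink in (20, 80):  # current uplink and the possible upgrade, in Gbps
    ratio = oversubscription(downstream_ports, 1, uplink)
    print(f"{uplink}G uplink: {ratio:.1f}:1")
```

Under that worst-case assumption the ratio is 9.6:1 with the 20G uplink and 2.4:1 after an upgrade to 80G.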

Edge:

For The Gathering 2015 we will be utilizing the EX2200-48T-4G as the edge switches. The EX2200-48 comes with 48 x 1G copper and 4 SFP interfaces and offers a rich feature set that is ideal for us. Functionality worth mentioning includes IGMP and MLD snooping, first-hop security for both IPv4 and IPv6 (IP-source-guard, IPv6-source-guard, DHCP-snooping, DHCPv6-snooping, IPv6 ND-inspection, dynamic ARP-inspection), sFlow, DHCPv4 option 82, DHCPv6 option 17/37, etc.

Other switches:

NocGW and TeleGW this year will consist of stacks of EX4300-24T and QFX5100-48S. This gives us the ideal port combination of 1G, 10G and 40G and also provides us with a fully redundant 80G (2*40GbE) ring between TeleGW, NocGW and Core.

With the above setup in mind we have designed a network where we can suffer an outage of any single network element without experiencing outage on any critical services.

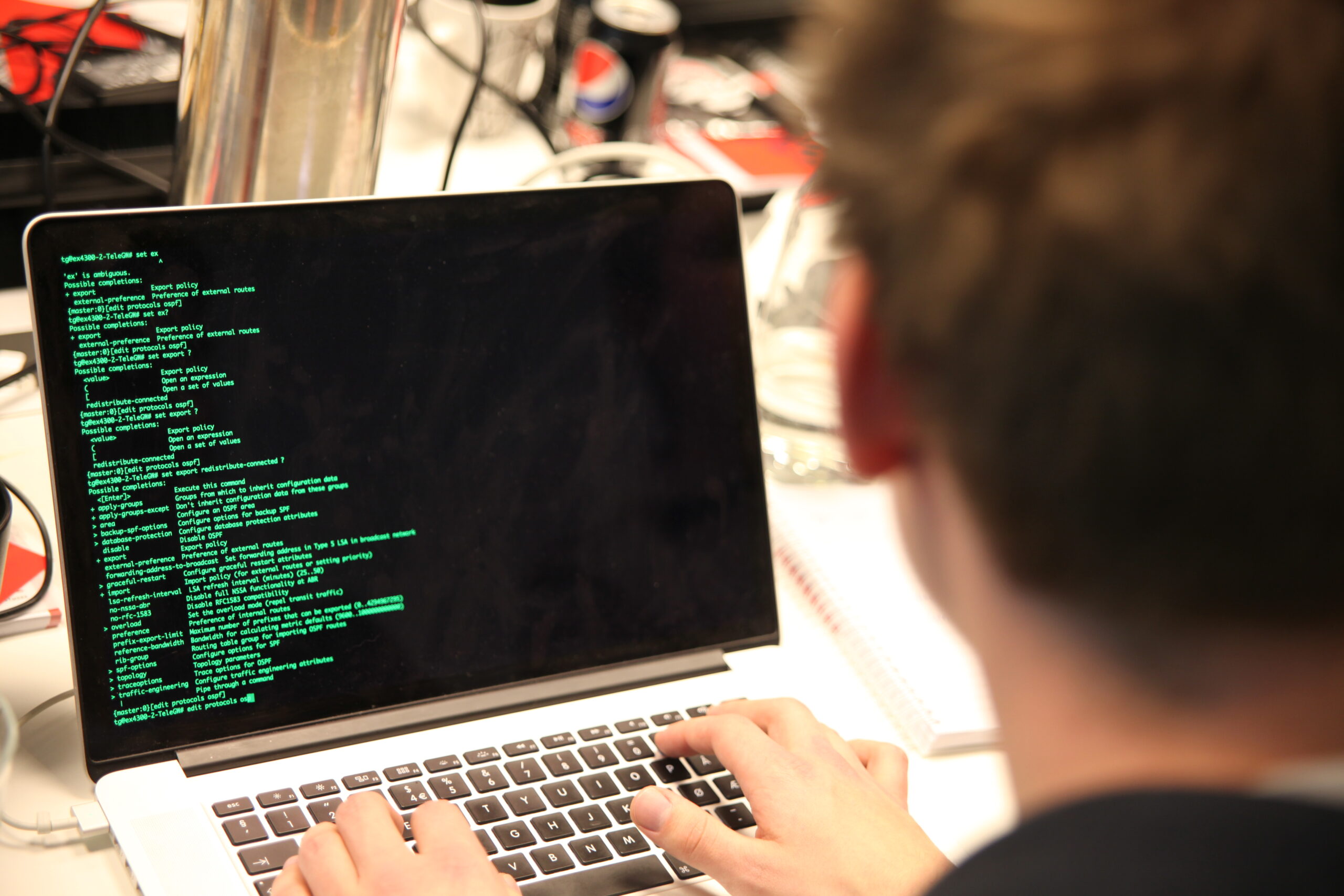

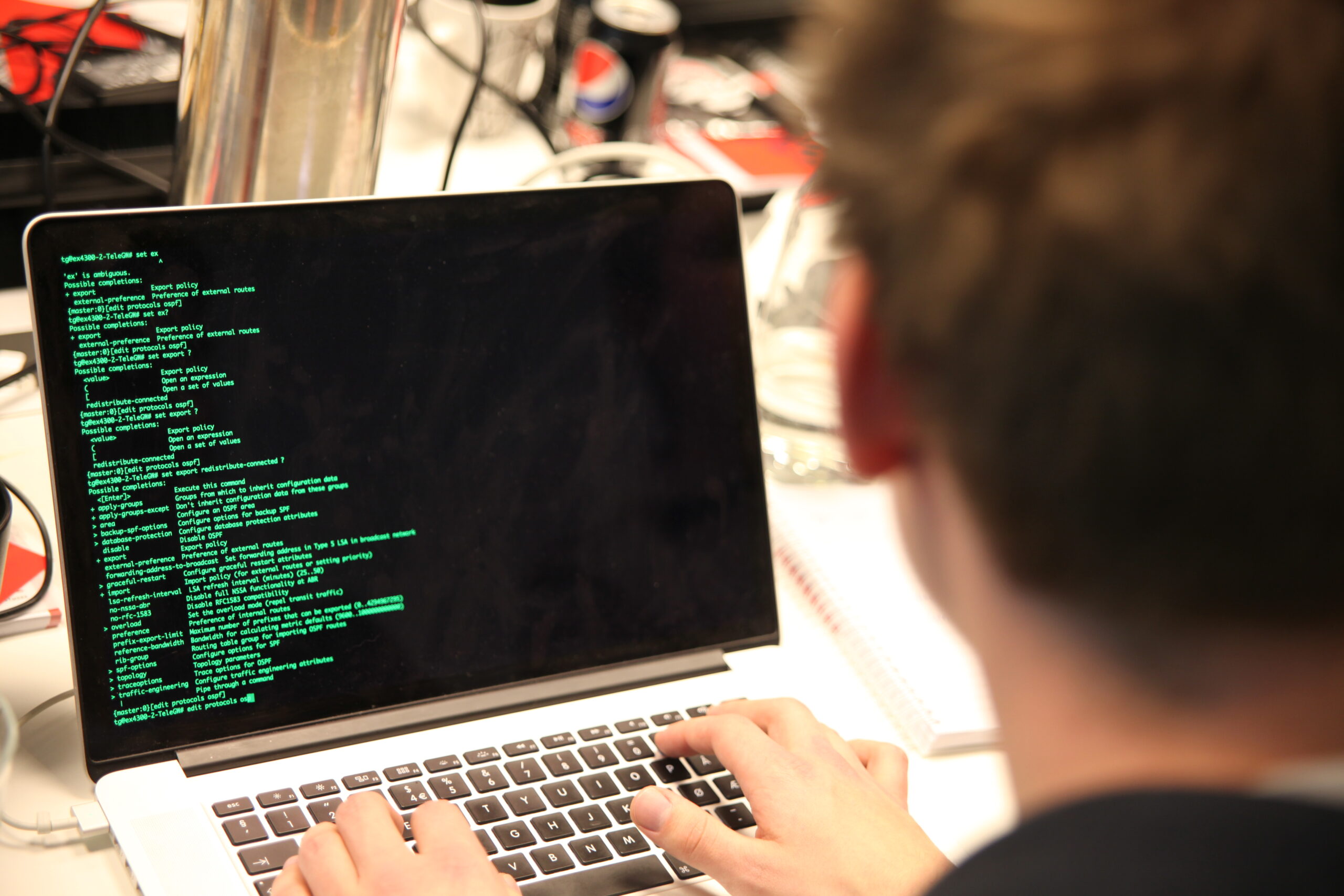

This weekend we completed one of the Juniper workshops at nLogic, led by senior network consultant Harald Karlsen. The workshops are part of the path for us in Tech:Net (and some from Tech:Server and Tech:Support) to be prepared for working with Juniper Junos after 10 very good and pleasant years with Cisco IOS.

**Here are some pictures from the weekend at nLogic (*):**

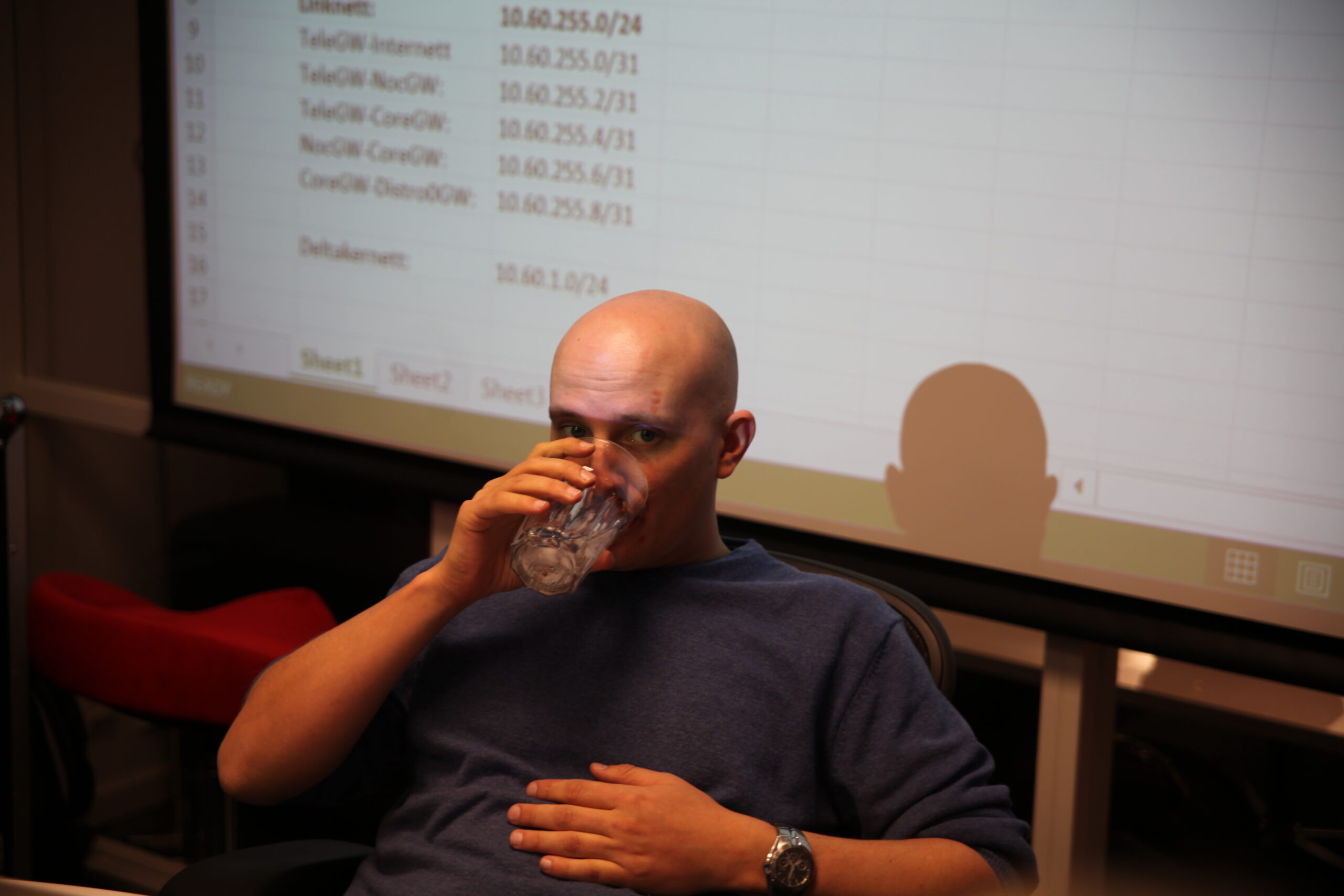

An organizer without his morning coffee is as useless as a switch with no power (?) 😉

The wireless experts want to learn more about R&S! 🙂

MacGyver getting ready to make a bomb out of some cables, a switch and some Junos configuration…

“We do not agree with the teacher! NO! NOT AT ALL!”

“Fresh air. So strong. Must inhale slowly.”

Teacher-Karlsen shows the students the equipment that they will work on…

j- 🙂

Ida 🙂

“This is not at all anything near, in the vicinity or close proximity of Cisco IOS, WHAT?”

They said 10 minutes, chocolates and coffee… and the room emptied in 10 seconds flat…

nLogic heroes! 🙂

The concentration is deep…

And the arguments high…

And low…

And yes, that is the button you press to turn the computer on!

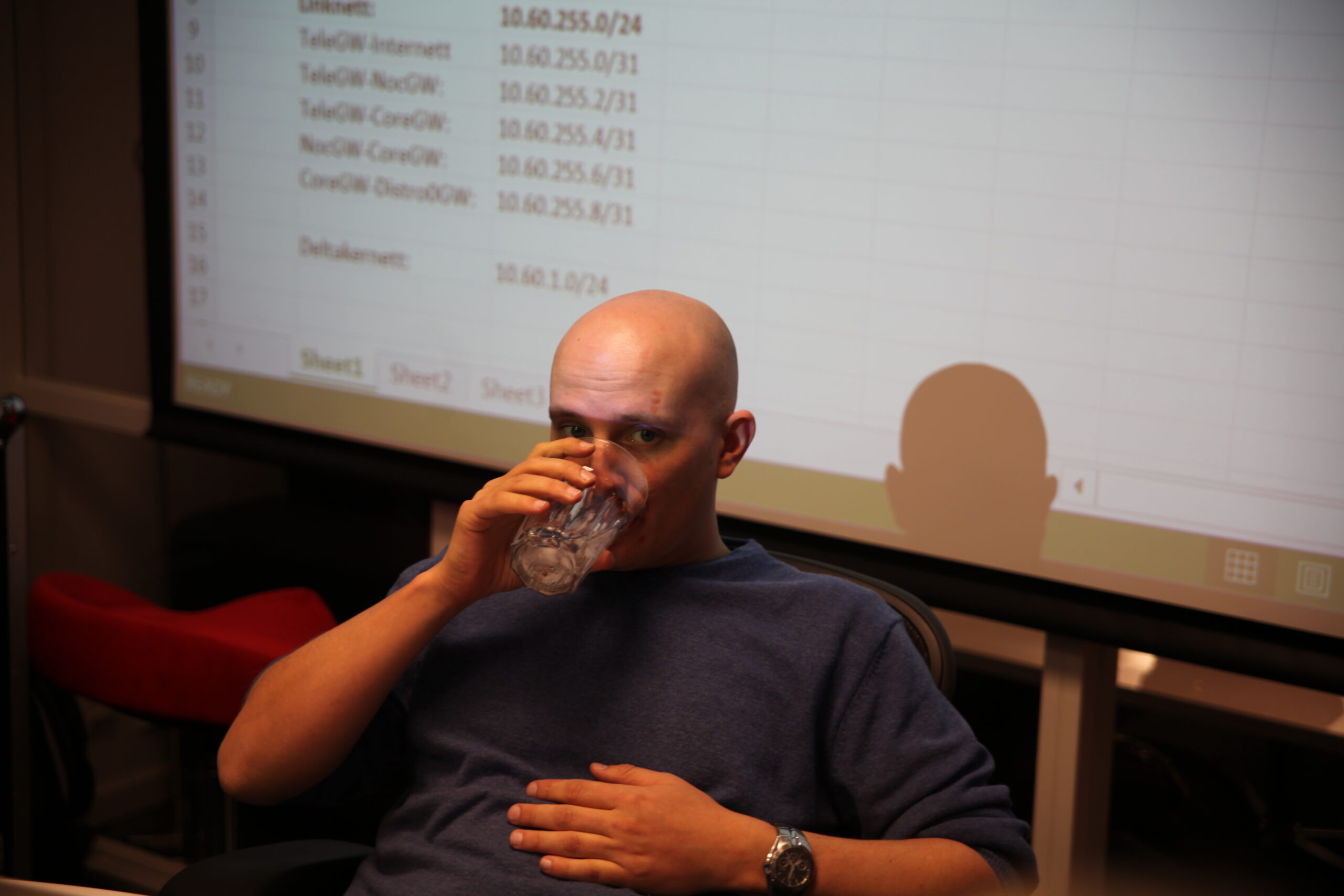

That throat has great need of some beverage…

:Server demonstrates to :Net how to take the network down…

And the concentration is like… BSDeep

So deep that the Organizer had to leave the room…

Which lasted like… 10 minutes?

That good old OSPF!

“I’m not sure if you were supposed to actually delete all the interfaces?”

“Not to worry, I’ll make a restore device out of some paperclips, a CAT5 cable and an old hard-drive”

(*) All pictures are taken, owned and copyrighted by Marius Hole. Ask before you download them and use them somewhere!

Wannabe is now open for applications for TG15, and you can read the description of Net here:

http://wannabe.gathering.org/tg15/Crew/Description/Tech:Net

If this sounds right for you, I recommend that you register in Wannabe and submit an application: http://wannabe.gathering.org/tg15/

We hope to see many interesting applications and applicants! 🙂

*Who is doing something for the Internet and free software in Norway?*

Hear what the National Competence Center for Free Software (Nasjonalt Kompetansesenter for Fri Programvare, friprog.no) does for free software in Norway, and what the Internet Society Norway Chapter (isoc.no) does for the future of the Internet.

The speakers are

– Christer Gundersen (friprog.no, first 20 min)

– Salve J. Nilsen (isoc.no, last 20 min)

Some of you may have experienced some problems with the internet, the wireless and the network in general. We have had some minor issues with the internet link, the internal routing and the wireless. Everything was on track and working before 09:00 on Wednesday morning, but we never really know how well things work until at least a few thousand participants actually arrive, connect to the network and put some load on it.

*The wireless:*

We had some small problems with the servers to start with, and then some small problems with the configuration. The main problem here was that we had to prioritize the cabled network.

We are still working on improving the wireless solution and hope that we have everything optimized by tomorrow morning.

The internal network:

We don’t have one specific problem to point to, more like hundreds of small problems. The list is long and it contains everything from software bugs to missing parts and some human error. But there have not been any major incidents.

The internet, which is a two part problem:

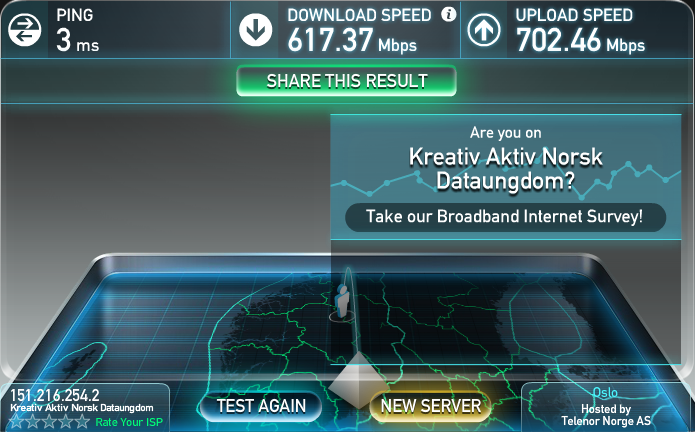

1. We have 4x10Gig links in a port bundle down to Blix Solutions in Oslo. These were connected and tested OK on Friday. When participants arrived on Wednesday and the links became loaded with traffic we started to see problems with the load balancing. We removed two ports that weren’t performing well from the bundle and continued on 100% working 20Gig (2x10Gig).

This morning, around 11:00, SmartOptics arrived with new optical transceivers and converters. They inspected the transceivers on the problem links with an optical microscope and could see that they weren’t completely clean. Using special cleaning sauce, they managed to remove the dust and dirt from our transceivers, leaving it to us to put them back in the bundle, now in 100% working condition. Next year we’ll make sure to be more careful about cleaning the optics before patching things together.

2. Origin, Steam, Blizzard, NRK, Microsoft, HP, Twitch… Some of these services rely on geolocation. There are multiple providers of geolocation services (like MaxMind), but they usually charge money per database pull. This means that the more a company wants to save, the longer it goes between pulls. As a result, we can be seen as being in Norway by services that update often, but in Russia, Puerto Rico, Italy or Antarctica etc. by companies that pull data from the geolocation database less frequently.

The reason for this is that our IP-address range is a temporary allocation from RIPE. RIPE has a pool of IP-addresses that they lend out for a short amount of time to temporary events. This means that we are not guaranteed to get the same IP-addresses every year, and that a lot of different events in different countries have been using the allocation in the months before us.

We are working continuously to solve this. We talk to Origin/EA and Valve, we try to NAT the most known and most used services through permanent Norwegian IP-addresses and we do ugly DNS-hacks. The sad fact is however that in the limited amount of time we have during TG, we won’t be able to solve this for every service.
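
The staleness effect in point 2 can be sketched in a few lines of Python. This is only an illustration. The dates and the order of countries in `HISTORY` are made up, and it assumes that the geolocation provider itself is always up to date, so the only delay is how long ago a service last pulled the database.

```python
from datetime import date
from typing import List, Tuple

# Hypothetical history of one temporary prefix: (in use from, country).
# Dates and order are invented for the example.
HISTORY: List[Tuple[date, str]] = [
    (date(2013, 9, 1), "IT"),
    (date(2013, 12, 1), "RU"),
    (date(2014, 3, 15), "NO"),
]


def country_seen(last_pull: date, history: List[Tuple[date, str]]) -> str:
    """Country a service believes the prefix is in, given its last database pull."""
    seen = "unknown"
    for valid_from, country in sorted(history):
        if valid_from <= last_pull:
            seen = country
    return seen


# A service that pulled recently sees the current event...
print(country_seen(date(2014, 4, 10), HISTORY))  # NO
# ...while one that last pulled in January still sees the previous user.
print(country_seen(date(2014, 1, 5), HISTORY))   # RU
```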

5GHz… Connect to the broadcasted ESSID: “The Gathering 2014” <- this one is only 5GHz and you are 100% surest to getest the bestest and freshestest frequencies. YAY!! 😉

Legacy clients with only 2,4GHz can connect to the “The Gathering 2014 2.4Ghz” ESSID.

2,4GHz is only best effort – the main focus is stable 5GHz

The password for both is: Transylvania

N.B. with capital T

Woot oO \:D/

    TeleGW#sh int po 1
    Port-channel1 is up, line protocol is up (connected)
    Hardware is EtherChannel, address is 001a.e316.a400 (bia 001a.e316.a400)
    Description: Interwebz
    Internet address is 185.12.59.2/30
    MTU 1500 bytes, BW 40000000 Kbit, DLY 10 usec,
    reliability 255/255, txload 1/255, rxload 1/255
    Encapsulation ARPA, loopback not set
    Keepalive set (10 sec)
    Full-duplex, 10Gb/s, media type is unknown
    input flow-control is on, output flow-control is off
    Members in this channel: Te5/4 Te5/5 Te6/4 Te6/5

    TeleGW#show ipv6 interfac po1
    Port-channel1 is up, line protocol is up
    IPv6 is enabled, link-local address is FE80::21A:E3FF:FE16:A400
    No Virtual link-local address(es):
    Description: Interwebz
    Global unicast address(es):
    2A02:ED01::2, subnet is 2A02:ED01::/64
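
For the curious: the link-local address in the output above follows from the port-channel's MAC address through the modified EUI-64 scheme (flip the universal/local bit of the first byte and insert `FF:FE` in the middle). The small Python sketch below reproduces it. The function name is ours, and it only uses the standard `ipaddress` module.

```python
import ipaddress


def link_local_from_mac(mac: str) -> ipaddress.IPv6Address:
    """Build the fe80::/64 link-local address from a MAC via modified EUI-64."""
    digits = "".join(c for c in mac if c in "0123456789abcdefABCDEF")
    if len(digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    raw = bytearray(bytes.fromhex(digits))
    raw[0] ^= 0x02  # flip the universal/local bit
    interface_id = bytes(raw[:3]) + b"\xff\xfe" + bytes(raw[3:])
    return ipaddress.IPv6Address(b"\xfe\x80" + b"\x00" * 6 + interface_id)


# MAC address taken from the "sh int po 1" output above.
print(link_local_from_mac("001a.e316.a400"))  # fe80::21a:e3ff:fe16:a400
```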

Some numbers from the Cisco kitlist:

5 4507R+E switches with redundant supervisors

166 wireless access points (mix of mainly 3602 and 2602, as well as 3502 and a few 1142)

2 5508 wireless controllers

7 4948E switches

2 4500-X switches

2 6500 switches

150+ optical 10G transceivers

Asle, a guy who is very interested in networking and, more specifically, the TG network, made this summary of the TG network (primary network vendor).

He has been crawling the internet up and down to locate as much accurate info about the core network as possible.

Check it out here: http://leknes.info/tgnett

There have been a lot of questions about the instability in the network at TG13. We are very sorry for not explaining this during TG13 or any time sooner than this. We are very, very sorry. But behold, the explanation is here… and I can say it in one short sentence:

We had a defective backplane on one of the two supervisor slots of the 6500 that terminated the internet connection.

First a short intro to Cisco, Catalyst 6500, sturdiness and reliability: the Cisco Catalyst 6500 is a modular switch that has been in production since 1999. It’s packed with functionality and is one of the most reliable modular switches we know of.

You may ask why we ended up with a defective one if they are so reliable… and you are right to ask. The answer is split in two. One: they have produced and sold many, many thousands of units, and even with very strict test routines there will always be some troublesome units. Two: this type of equipment is not meant to be shipped and moved back and forth as much as the Cisco Demo Depot equipment is. In the end the equipment will show problems caused by all the moving back and forth, not to mention the line cards constantly being swapped.

If we were a regular customer, we would have run the equipment through extensive testing. And as a regular customer with a service agreement, we would have contacted the Cisco TAC (Technical Assistance Center) and registered a service request. They would have either solved the problem remotely, if it was a software bug or configuration error, or issued an RMA (return material authorization) for the faulty hardware. This takes time, and that is something we usually account for when building a new, big network.

At TG we test all the equipment before we ship it to Vikingskipet. On the 6500 and 4500 we run the command “diagnostic bootup level complete”, and reboot the switches. When they are up again we have a complete diagnostic of all the line cards of the switch, and we can easily spot if something is wrong. Both of our 6500s passed the tests last year, so we shipped them up to Vikingskipet and configured them, and everything was seemingly ok.

But not everything was ok. Not at all. We noticed something weird on Wednesday after the participants arrived. We had some strange latency and loss on the link between us and Blix Solutions. The interfaces did not have drops or errors; we just saw more packets going out than coming back. We first involved Blix Solutions in the investigation. They checked everything at their end and everything was ok there, so we involved Eidsiva bredbånd. They checked everything between Blix Solutions and us, and everything was fine there as well.

This started to get stranger and stranger… Traffic was going out, but didn’t come back. No interface drops. No interface errors. Peculiar.

The setup was quite simple… 4x10Gig interfaces in a port-channel bundle towards Blix Solutions. 2x10Gig interfaces (X2) in each supervisor (Sup720).
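
To see why a fault on one supervisor can hurt only part of the traffic in such a bundle, here is a small Python sketch of per-flow load balancing. It is an illustration of the general idea, not the algorithm the 6500 uses. The CRC32 hash, the member names and the generated flows are all assumptions made for the example.

```python
import zlib
from collections import Counter
from typing import List

# Hypothetical names for the four bundle members, two per supervisor.
MEMBERS = ["sup1-port1", "sup1-port2", "sup2-port1", "sup2-port2"]


def member_for_flow(src_ip: str, dst_ip: str, members: List[str]) -> str:
    """Pick a bundle member from a hash of the flow's addresses.

    Real switches use their own, platform-specific hash; CRC32 is a stand-in.
    """
    key = f"{src_ip}-{dst_ip}".encode()
    return members[zlib.crc32(key) % len(members)]


# 1000 made-up flows from different sources to one destination.
flows = [(f"10.0.{i // 250}.{i % 250 + 1}", "192.0.2.1") for i in range(1000)]
per_member = Counter(member_for_flow(src, dst, MEMBERS) for src, dst in flows)
affected = sum(n for name, n in per_member.items() if name.startswith("sup2"))

print(dict(per_member))
print(f"{affected} of {len(flows)} flows cross the second supervisor")
```

Each flow always lands on the same member, so flows hashed onto a faulty member keep suffering while the rest look perfectly healthy. That is one reason problems like this can be hard to spot from interface counters alone.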

So after some testing and several different hypotheses during Wednesday, we waited until the day was over. Towards Thursday morning the traffic dropped below 8Gig. Once it was stable below 8Gig, we forced all the traffic over to one 10Gig interface at a time, starting with those in the topmost supervisor. The traffic flowed smooth as silk. Then, and I’m very sorry to say this, we actually failed the traffic over, one interface at a time, to the 10Gig interfaces on the other supervisor for five minutes. And guess what? The internet traffic had drops and latency… We forced it back to the first supervisor and everything ran smoothly again.

We had an answer for why we had the problems, but then we had to find a way to solve them on the fly, in production, before 6000 nerds woke up to life and started using the internet again! OMG! WHAT A PRESSURE! oO

As you may know, the transport between Vikingskipet and Blix Solutions is “colored”, and we only had colored optical transceivers in the SFP+ format, with SFP+ converters in X2 format. The rest of the 10Gig interfaces on the 6500 were XENPAK.

Luckily for us, Blix Solutions had put a TransPacket box at each end, and this box can be configured with the right wavelengths (colors) on the interfaces. That gave us the possibility to translate the wavelength through the TransPacket box and receive on a 10Gig XENPAK interface on the regular 1310 nm single-mode wavelength.

But this was not in place until a little way into Thursday. So all the instability you may have experienced on Wednesday and “early” Thursday was caused by this. After this, there should not have been any troubles or instabilities in the network as far as we know.

While explaining this, we also have to applaud both Eidsiva bredbånd and Blix Solutions for their service. They were working with us every step of the way to find a solution. And we can really say we now know why Blix has the name they have… Blix Solutions, because they are very open and forthcoming about finding a solution, however far-fetched or unrealistic it is.

Thank you both, Eidsiva bredbånd and Blix Solutions, for the cooperation at TG13!

We really look forward to working with both of you for TG14 🙂