The party is well underway, and I was dumb enough to say aloud the phrase “We should probably blog something?”. Everyone agreed, and thus told me to do it. Damn it.

Anyway, things are going disturbingly well. We were done with our setup 24 hours ahead of time, more or less. I’ve had the special honor of being the first to get a valid DHCP lease in the NOC and the first to get a proper DHCP lease “on the floor”. And I’ve zeroized the entire west side of the ship (i.e. reset the switches to get them to request proper configuration, which involved physically walking to each switch with a console cable and a laptop).

But we have had some minor issues.

First, which you might have picked up, we have to tickle the edge switches a bit to get them to request configuration. This cost us a couple of hours of delay during the setup. And it means that whenever we get a power failure, our edge switches boot up in a useless state and we have to poke them with a console cable. We’ve been trying to improve this situation, but it’s not really a disaster.

We’ve also had some CPU issues on our distribution switches, mainly whenever we power on all the edge switches. To reduce the load, we disabled LACP – the protocol used to control how the three uplinks to each edge switch are combined into a single link. This worked great, until we ran into the next problem.

The next problem was a crash on one of the EX3300 switches that make up a distribution switch (each distribution switch has 3 EX3300 switches in a virtual chassis). We’re working with Juniper on the root cause of these crashes (we’ve had at least 4 so far as I am aware). A single member in a VC crashing shouldn’t be a big deal. At worst, we could get about a minute or two of down-time on that single distribution switch before the two remaining members take over the functionality.

However, since we had disabled LACP earlier, that caused some trouble: the link between the core router and the distribution switch didn’t come back up again, because that’s a job for LACP. This happened to distro7 on Wednesday. We were able to bring distro7 up again quite fast regardless, even with a member missing.

After that, we re-enabled LACP on all distribution switches, which was the cause of the (very short) network outage across the entire site on Wednesday.

Other than that, there is little to report. On my side, being in charge of monitoring and tooling (e.g. Gondul), the biggest challenge is that the ring is now a single virtual chassis, which makes it trickier to measure the individual members. And the fact that graphite-api has completely broken down.

Oh, and we’ve had to move our SRX firewall, because it was getting far too hot… more on that later?

This time, there were no funny pictures though!

This year our wireless seems to be a lot more stable than ever before.

Unfortunately I’m not much of a wireless guy myself, so I can’t go into all the details, but I just felt like writing a small post about our wireless anyway.

This year we have Fortinet onboard to provide us with equipment for the wireless network.

The equipment we use consists of a total of 162 FortiAP U421EV (deployed) and 2 FortiWLC-3000D.

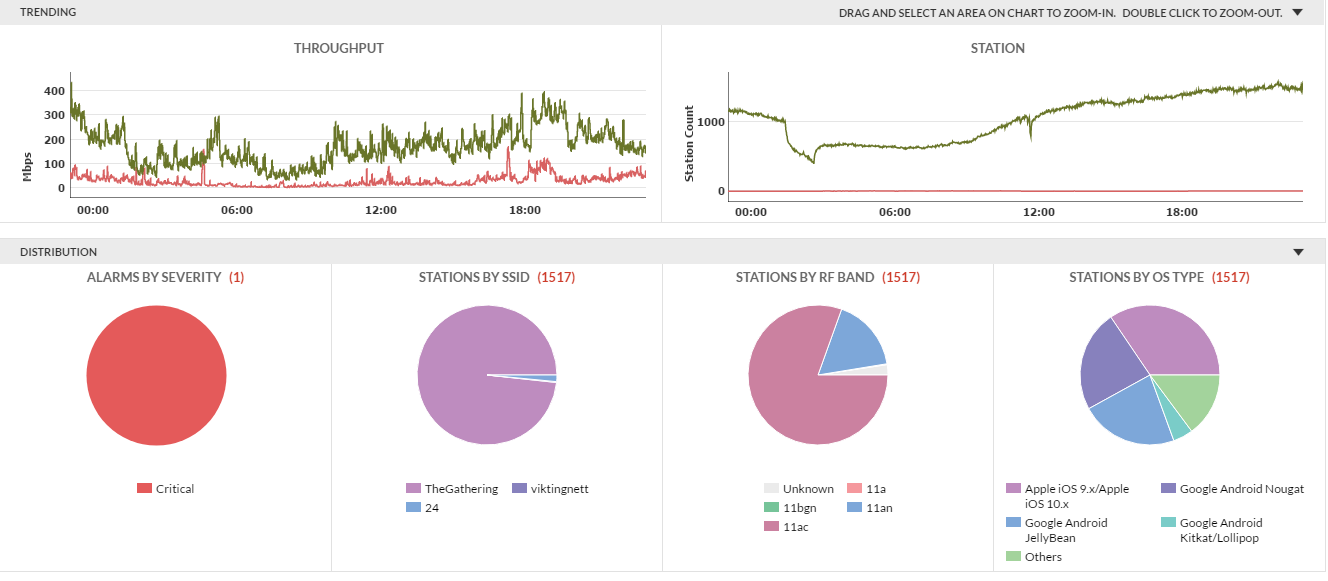

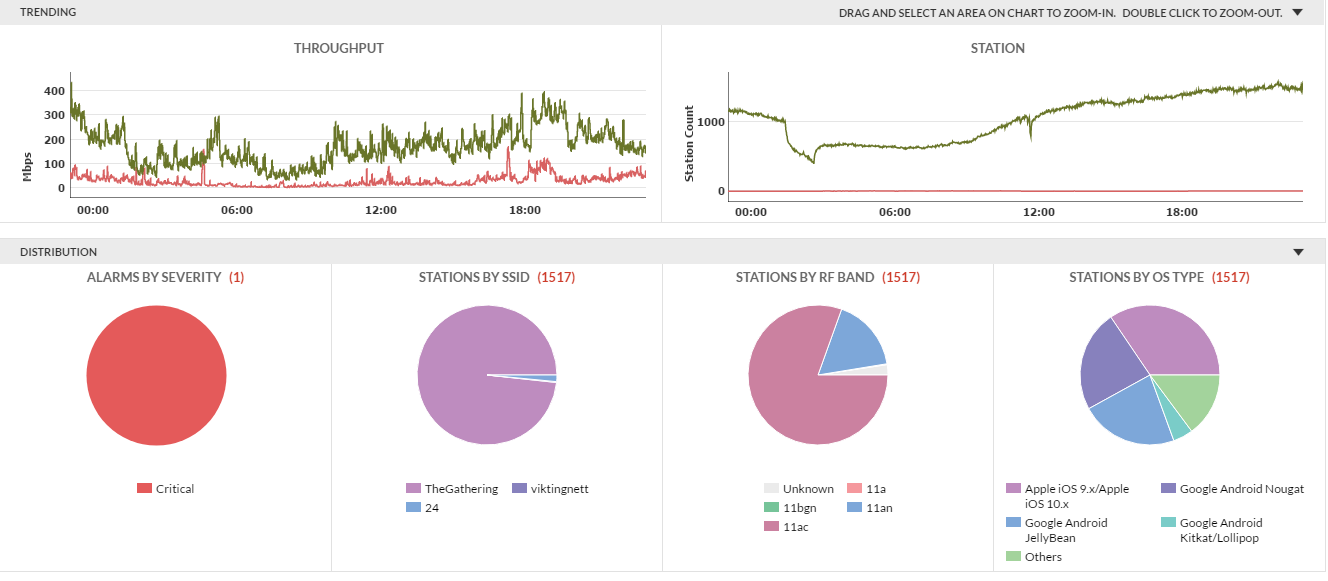

As of right now we have 1517 clients, where 1487 of them are on 5GHz and 30 on 2.4GHz.

The 2.4GHz SSID is hidden and mostly used for equipment that doesn’t support 5GHz, like the PS4.
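To put those numbers in proportion, here is a small back-of-the-envelope calculation. It only uses the figures quoted in this post (1517 clients, 1487 on 5GHz, 30 on 2.4GHz, 162 deployed APs); the average per AP is a plain division and says nothing about how clients are actually spread across the hall.

```python
# Figures quoted in the post; a snapshot, not live data.
CLIENTS_TOTAL = 1517
CLIENTS_5GHZ = 1487
CLIENTS_24GHZ = 30
APS_DEPLOYED = 162


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal."""
    return round(100 * part / whole, 1)


def clients_per_ap(clients: int, aps: int) -> float:
    """Naive average: assumes clients are spread evenly, which they are not."""
    return round(clients / aps, 1)


if __name__ == "__main__":
    print(f"5GHz share:     {share(CLIENTS_5GHZ, CLIENTS_TOTAL)} %")
    print(f"2.4GHz share:   {share(CLIENTS_24GHZ, CLIENTS_TOTAL)} %")
    print(f"Clients per AP: {clients_per_ap(CLIENTS_TOTAL, APS_DEPLOYED)}")
```

In other words, roughly 98 % of the clients are on 5GHz, and the average works out to a bit over nine clients per access point.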

We have performed some testing by roaming around the vikingship and got an average of 20ms latency and 35-ish Mbps. Our Wireless guy almost managed to watch an entire episode on Netflix without major disruption while walking around!

The access points are spread evenly across the Vikingship.

We have one access point at the end of every second row on each side of the Vikingship, as well as one access point per row on each side.

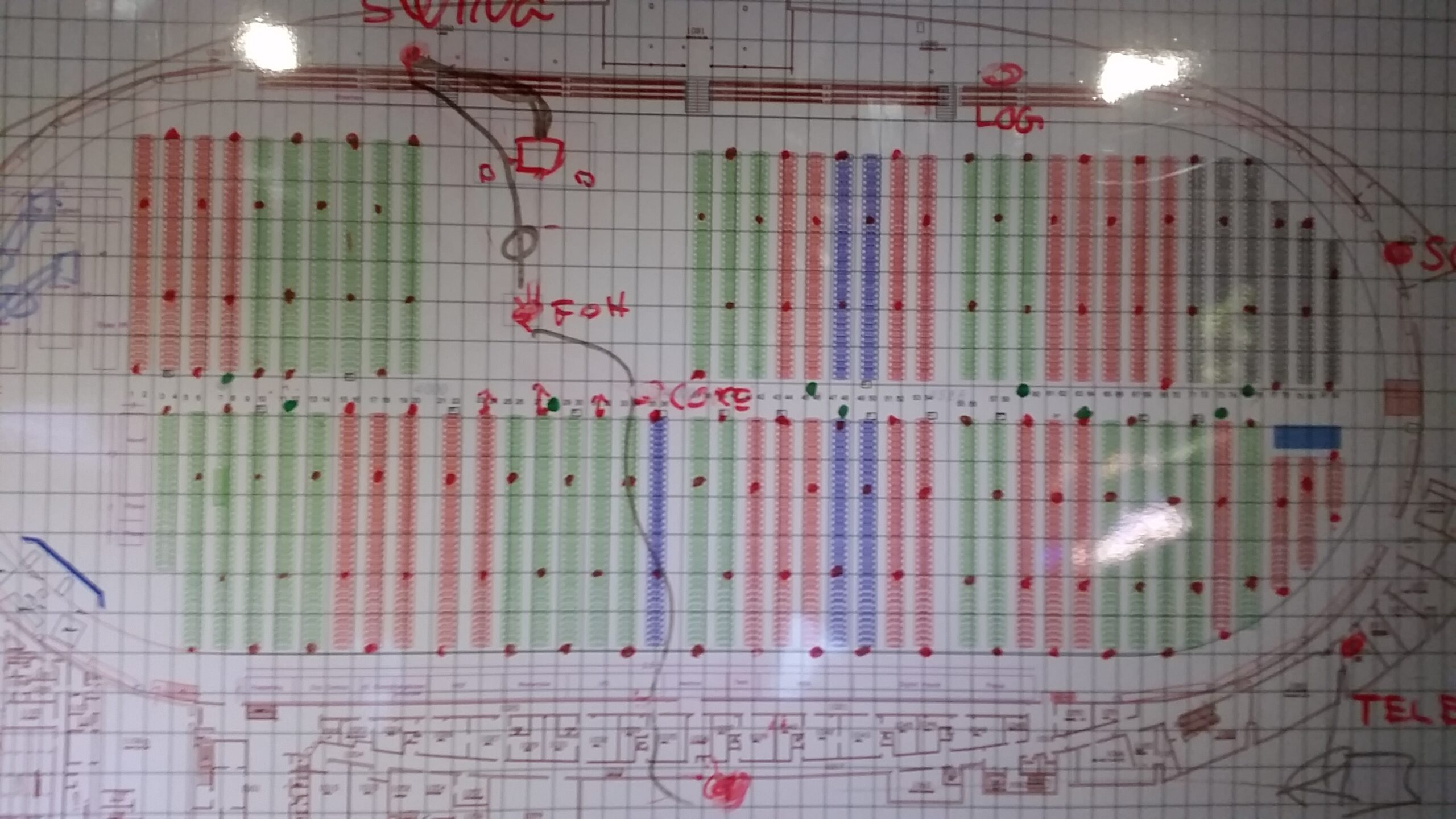

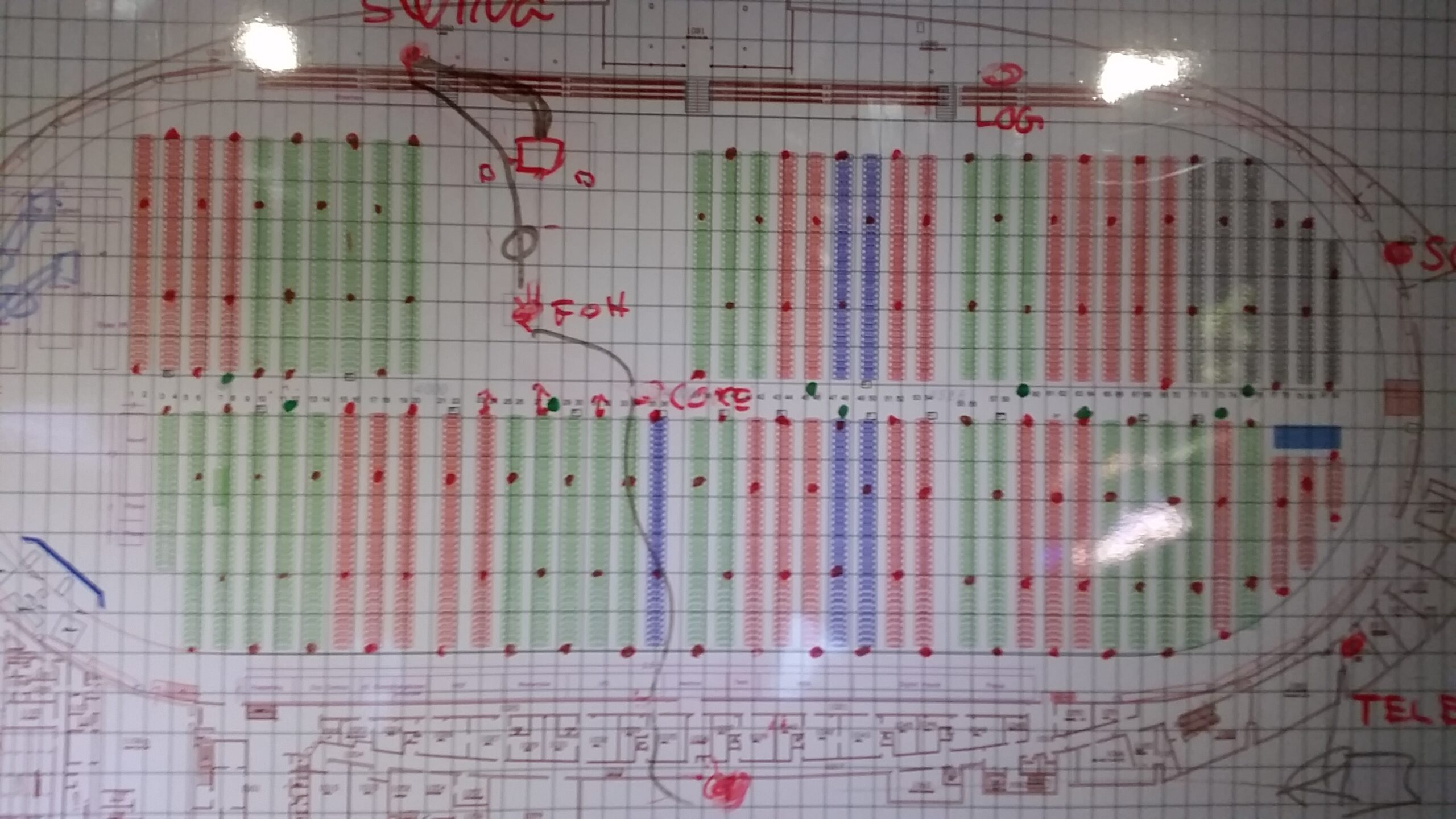

Well.. it’s kinda hard to explain in words, so have a low-quality picture from my phone of our drawing board in the NOC:

The red dots across the tables represent the AP placements in the main area of the Vikingship.

Below you can see a map with the AP placement from our wireless controller.

Well.. as I said, I’m not much of a wireless guy, but I hope you got some interesting information about how our wireless is deployed this year.

If you have any questions regarding Wireless at TG17, feel free to contact us on the official discord server for The Gathering, channel #tech 🙂

And meanwhile, we are on the lookout for trouble.

Our lights in front of the NOC have turned green!

All edgeswitches are now up and running.

We will perform some tweaks and do a DHCP-run shortly.

See you soon!

Glenn is amazed by the servers. Or lights. Or both. Too much coffee?

Thank you Nextron, for blazing fast servers!

Photo: Joachim Tingvold

It’s Saturday. On Wednesday 5000 people storm the fort. Or in our case, the Viking Ship.

And the good news is, I can now sit in the NOC and enjoy moving pictures of cute animals.

On a slightly more serious note:

We’ve got the internet uplink up (a week ago).

We’ve got net in the NOC.

The ring is up.

We’re setting up a distro-switch to test a potential issue we might have to work around.

Our server rack is mostly up.

And we’ve delivered the first few access nets to the various crews that need it to get work done.

We still have quite a bit of stuff to do, but we’re (slightly) ahead of schedule. To get an idea of what’s left, here are some (!) of our access switches, still in their boxes.

A few meters worth of fiber cable.

And with one last image, I’m signing off and going back to my cat picture. I mean back to work.

See you soon!

Some of you might have noticed some instability on https://gathering.org tonight (Wednesday April 5th, 2017).

Ok, that was me. My bad.

It all started innocently enough. “Why don’t you set up SSL on gathering.org? It’ll only take 20 minutes!”. Ah, well, no, as it turns out, it didn’t take 20 minutes. As I knew it wouldn’t.

We put up SSL weeks (months?) ago, using Let’s Encrypt and whatnot. It was reasonably straightforward, but it revealed a whole lot of issues that have taken us a great deal of time to find and fix. At their core most of the issues are simple: hard-coded links to paths using http. But finding these hard-coded references hasn’t always been easy. Gathering.org isn’t just a plain CMS, it is a Django (Python) site that also has static content hosted by Apache, a PHP component to control the front page (hosted on a different domain), a Node.js component to control locking on said PHP component (…) and god knows what. And it integrates with wannabe for authentication.

But that was weeks ago. So what happened tonight?

In front of gathering.org there’s a proxy, Varnish Cache, that caches content and makes sure that you spamming F5 doesn’t bring the site down. Yours truly happens to be a Varnish developer, but Varnish was in use long before I arrived.

Varnish, however, does not deal with SSL, it just does HTTP caching. So to get SSL we do:

Client –[ssl]–> Apache –[http]–> Varnish –> Apache –> (gunicorn/files/etc)

But I managed to mess it up months ago, and we ended up with:

Client –[ssl]–> Apache –> (gunicorn/files/etc)

Which works. Until you get traffic. And we predict some traffic spikes next week. So I went about fixing it.

But alas, the gathering.org site is a steaming pile of legacy shit (this is a technical term). And I met resistance every step along the way. So what I ended up doing was quite literally saying “fuck it” out loud, then deleting the entire Varnish configuration, rebuilding it from scratch, bypassing Apache when possible, deleting most of the Apache config, and establishing an archive site for old content. This was not how I had planned it, but it meant some quick improvising. Normally, this is a process you plan out for weeks. I did it in … err, a couple of hours. Hooray?

So now we have:

Client –[ssl]–> Apache –[http]–> Varnish –> Gunicorn

and

Client –[ssl]–> Apache –[http]–> Varnish –> Apache (Static files, etc)

And archive.gathering.org – which I also had to do some quick fixes on.
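As a rough illustration of the split between the two chains above: on a cache miss, Varnish has to decide whether Gunicorn or Apache should answer. The real decision lives in the Varnish configuration (written in VCL), which isn't shown in this post, so the Python sketch below only mirrors the idea. The URL prefixes and backend names are hypothetical.

```python
# Hypothetical URL prefixes for content Apache serves straight from disk.
# The actual paths used on gathering.org are not given in the post.
STATIC_PREFIXES = ("/static/", "/media/")


def pick_backend(path: str) -> str:
    """Return the name of the backend that should answer a cache miss."""
    # str.startswith accepts a tuple and matches any of its entries.
    if path.startswith(STATIC_PREFIXES):
        return "apache"    # static files, etc.
    return "gunicorn"      # everything else goes to the Django app


if __name__ == "__main__":
    for path in ("/", "/static/css/site.css", "/tg17/news/"):
        print(f"{path} -> {pick_backend(path)}")
```

The point of the arrangement is that both kinds of content pass through the cache first, so a traffic spike mostly hits Varnish rather than Gunicorn or Apache.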

This meant fixing stuff in Apache, in Varnish, in DNS (bind – setting up archive.gathering.org), debugging cross-site request forgery modules in Django, cache invalidation issues for editorial staff, running a regular expression over 10GB of archived websites reaching back to 1996, etc etc. Probably lots more too.
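The post doesn't say which regular expression was used on the archive. As a minimal sketch of the general idea, assuming the goal was to turn hard-coded `http://gathering.org` links into `https://` ones, something like this would do it. The pattern and the function name are assumptions made for illustration.

```python
import re

# Assumed pattern: hard-coded http:// links to gathering.org or
# www.gathering.org. The lookahead stops it from matching longer host
# names that merely start with "gathering.org".
HTTP_LINK = re.compile(
    r"http://((?:www\.)?gathering\.org)(?![\w.-])",
    re.IGNORECASE,
)


def upgrade_links(html: str) -> str:
    """Rewrite http:// links to the site into https:// links."""
    return HTTP_LINK.sub(r"https://\1", html)


if __name__ == "__main__":
    sample = '<a href="http://www.gathering.org/tg16/">TG16</a>'
    print(upgrade_links(sample))
```

Run over a directory tree, the same function would be applied to each file's contents in turn. Links to other hosts are left alone.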

By the time I was done, someone was ready to put an awesome “work in progress” graphic on the site.

The Tech-crew meetup planned during The Gathering 2017 is starting to take shape.

A brief summary of what it is: a social event for people who do tech-related work at computer parties. See the original invite for details.

So far this is what I know:

Time: Friday, 18:00, location disclosed to those who are invited (which is anyone who drops me an e-mail).

I’ve gotten 9 signups, totaling 16+ people, not counting myself or whoever from Tech:Net at TG is available. All together more than 10 different parties are represented, spanning all sizes.

And there’s still room for lots and lots more. So if you volunteer at a computer party or similar event in a technical capacity and want to hang out, drop me an e-mail at kly@gathering.org and I’ll add you to the list (please let me know what party (or parties), the rough size, and whether it’s just you or if you’re bringing a friend (or friends)).

The agenda is pretty hazy. I figure this is what we do:

- I say hello I suppose.

- Go around the room, everyone presents themselves and tells us a little bit about what party (or parties) they volunteer for. Nice things to include are size, where you get your stuff from (rent? borrow? steal? “bring your own device”?), what you do for internet, special considerations, or really whatever comes to mind.

- ????

- ????

What I want to avoid is that this becomes a “Tech:Net at The Gathering tells you how to do stuff”-thing. We’re represented, and we’ll obviously talk about whatever, but we’re all there as equal participants. Many of the challenges we have with 5000 participants are irrelevant to most of you, yet smaller parties have challenges and opportunities that are just as interesting to discuss.

If you want to do a small presentation, or want to talk about a specific topic, then let me know and we’ll make room for it.

Topics I might suggest to get things started:

(X) Do you do end-user support? How much/little?

(X) How do you get a deal with an ISP? Do you have someone you can call if the uplink goes down at 2AM?

(X) Do you use subnetting at all? If so: What was the breaking point where it became necessary?

(X) Where do you get equipment from?

(X) Firewalling? Either voluntary or involuntary (e.g.: getting internet through filtered school network)

(X) Public addresses or NAT?

(X) Do you provide IPv6? Do you care? Do you want to?

(X) ?????

We have the room until we’re done, basically, and there’ll be some type of food. If there aren’t too many of us, there might be time for a unique guided tour too, but I’m not making any promises (remember: I’m lazy).

Update: We’re now up to 23 “confirmed” signups representing at least 13 different events. And we’ve secured food, courtesy of KANDU.

All your base are belong to us!

We will have a slightly higher access point density this year compared to TG16. While it might make sense on paper to introduce more APs, we seem to forget how much work it actually is to prepare them in such large quantities…

Earlier today we unboxed 276(!) base stations/access points and prepared them for their journey to Vikingskipet, Hamar.

A big thank you to Avantis for lending us their facilities!

The beacons are lit!

We are happy to report that the internet connection for TG17 is up and running.

Tech:Net decided to take the “pre-TG” preparations one step further this year by building our backbone network and installing our DHCP/DNS servers a week before schedule! This gives us the opportunity to tweak and polish all the nuts and bolts of our most critical infrastructure without being on site.

What does this mean for us? It means that we’re able to deploy and provision our edge switches from day 1 without waiting for internet access or the DHCP/DNS-servers to be installed on the first day.

Pretty sweet!

Stay tuned – we will post details about our network design later on..